Doug Kerr

Well-known member

We read of two seemingly antagonistic aspects of an antialiasing filter:

Thus it would seem that the antialising filter should not attenuate any frequencies we could successfully capture anyway. It should be all benefit and no harm. But we hear otherwise.

The key to this conundrum is in the three letters C-F-A - the use of a color filter array (CFA) for color imaging in most of our cameras.

Before we see how this happens, let's first practice our thoughts on the simpler case of a monochrome (B&W) sensor camera.

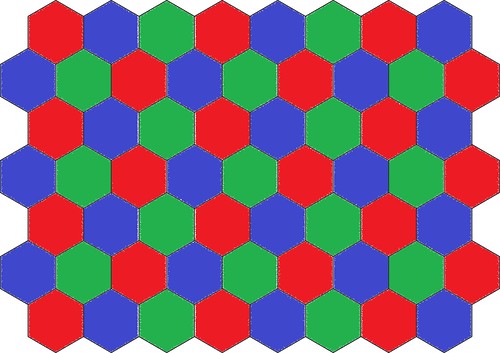

Figure 1. Monochrome sensor

The left-hand panel of figure 1 shows a section of a monochrome sensor, with photodetector (pixel) pitch p. The use of the adjacent squares suggests that the monochrome photodetectors have an intake area as large as that pitch allows ("100% fill"). That in fact is of no importance to what I will speak of here, but we might as well assume it.

We often here that the Nyquist frequency for a sampling pitch p is 0.5/p. But, unlike, for example, the sampling of an audio waveform, here we sample a 2-dimensional variable (or image). As a result, the sampling pitch, and thus the Nyquist frequency, could be different for variations in illuminance in different directions.

In the left panel we see what determines the sampling pitch in the x, y, and 45° diagonal directions. The right hand panel shows the variation in Nyquist frequency in polar coordinates: the distance to the curve, in any direction from the center, indicates the Nyquist frequency for variations in illuminance along that direction.

Note here that for the x or y directions (to which we pay the most attention), the Nyquist frequency is 0.5/p. But for a 45° diagonal direction, the Nyquist frequency is 0.707/p.

In any case, if we consider the x and y directions, to avert aliasing, we would want an antialising filter whose frequency response (MTF) has "rolled off" considerably by a frequency of 0.5/p (the Nyquist frequency). But no frequencies above that could be captured anyway, so this filter confers its benefits with no real disadvantage.

Now, we consider our infamous color filter array (CFA) sensor, using a similar figure:

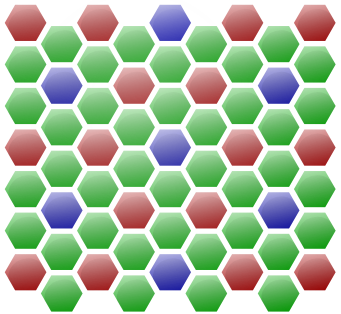

Figure 2. Color filter array (CFA) sensor (Bayer pattern)

Here the left panel shows the Bayer pattern of R, G, and B photodetectors. Again the photodetector (sensel) pitch is p, and the figure suggests 100% fill.

We note the x, y, and 45° diagonal sampling pitches for some of the "color layers". On the right, we show the resulting Nyquist frequencies in polar coordinates, for any direction of the illuminance change.

Note that, for the x and y directions, to which we pay most attention:

Now, the punch line.

In order to avert aliasing in the R and B layers, we must use an antialiasing filter whose response (MTF) has rolled off considerably by a spatial frequency of 0.25/p.

But given that the Nyquist frequency of our digital image is 0.5/p, we hope to be able to capture spatial frequencies in the image up to almost that. The antialising filter, having "rolled off" by about half that frequency, seriously interferes with our resolution hopes.

And that's what all the palaver is about.

Best regards,

Doug

• We must have one, else we will experience the phenomenon of aliasing in our images (notably the type that manifests as "color moiré").

• By its nature, an antialiasing filter degrades the resolution of the image.

If we consider some basic principles of the theory of representation of a continuous variable by sampling, this seems paradoxical:

• We get (consequential) aliasing if there are frequencies present in our image (with any consequential amplitude) at or above the Nyquist frequency for our sensor (which is determined by its sampling pitch).

• An antialiasing filter averts this by having a frequency response (MTF) that rolls off to a low value by the time we reach the Nyquist frequency, thus eliminating any troublesome frequencies before the sampling is done.

• And in any case, we can't successfully capture frequencies in our image at or above the Nyquist frequency anyway.

Thus it would seem that the antialiasing filter should not attenuate any frequencies we could successfully capture anyway. It should be all benefit and no harm. But we hear otherwise.

The key to this conundrum is in the three letters C-F-A: the use of a color filter array (CFA) for color imaging in most of our cameras.

Before we see how this happens, let's first practice our thoughts on the simpler case of a monochrome (B&W) sensor camera.

Figure 1. Monochrome sensor

The left-hand panel of figure 1 shows a section of a monochrome sensor, with photodetector (pixel) pitch p. The use of the adjacent squares suggests that the monochrome photodetectors have an intake area as large as that pitch allows ("100% fill"). That in fact is of no importance to what I will speak of here, but we might as well assume it.

We often hear that the Nyquist frequency for a sampling pitch p is 0.5/p. But, unlike, for example, the sampling of an audio waveform, here we sample a 2-dimensional variable (an image). As a result, the sampling pitch, and thus the Nyquist frequency, could be different for variations in illuminance in different directions.

In the left panel we see what determines the sampling pitch in the x, y, and 45° diagonal directions. The right-hand panel shows the variation in Nyquist frequency in polar coordinates: the distance to the curve, in any direction from the center, indicates the Nyquist frequency for variations in illuminance along that direction.

Note here that for the x or y directions (to which we pay the most attention), the Nyquist frequency is 0.5/p. But for a 45° diagonal direction, the Nyquist frequency is 0.707/p.
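As a quick numerical check of those two values, here is a minimal Python sketch. It assumes the curve in the right-hand panel is a square in the frequency plane (|fx| and |fy| each limited to 0.5/p), which is consistent with both figures quoted above. The function name `nyquist_square_grid` is hypothetical, chosen only for illustration.

```python
import math


def nyquist_square_grid(pitch, angle_deg):
    """Nyquist frequency along a direction for a square sampling grid.

    Assumption: the Nyquist limit is the square |fx| <= 0.5/pitch,
    |fy| <= 0.5/pitch, so the limit along a direction is the distance
    from the origin to that square's boundary.
    """
    theta = math.radians(angle_deg)
    # The larger of the two direction components reaches its limit first.
    largest_component = max(abs(math.cos(theta)), abs(math.sin(theta)))
    return 0.5 / (pitch * largest_component)


p = 1.0  # photodetector pitch, in arbitrary units
along_x = nyquist_square_grid(p, 0)
along_y = nyquist_square_grid(p, 90)
along_diagonal = nyquist_square_grid(p, 45)
```

With p = 1, this gives 0.5 along x or y and about 0.707 along the 45° diagonal.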

In any case, if we consider the x and y directions, to avert aliasing, we would want an antialiasing filter whose frequency response (MTF) has "rolled off" considerably by a frequency of 0.5/p (the Nyquist frequency). But no frequencies above that could be captured anyway, so this filter confers its benefits with no real disadvantage.

Now, we consider our infamous color filter array (CFA) sensor, using a similar figure:

Figure 2. Color filter array (CFA) sensor (Bayer pattern)

Here the left panel shows the Bayer pattern of R, G, and B photodetectors. Again the photodetector (sensel) pitch is p, and the figure suggests 100% fill.

We note the x, y, and 45° diagonal sampling pitches for some of the "color layers". On the right, we show the resulting Nyquist frequencies in polar coordinates, for any direction of the illuminance change.

Note that, for the x and y directions, to which we pay most attention:

• For the G layer, the Nyquist frequency is 0.5/p, just as would be suggested by the photodetector pitch (and the pixel pitch of the digital image itself).

• However, for the R and B layers, the Nyquist frequency is 0.25/p, not that suggested by the photodetector pitch, or that of the digital image itself, which would be 0.5/p. It is half that value.

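The two layer values above can be checked with a short Python sketch. It assumes an RGGB tile order and takes the x-direction sampling pitch of a layer to be the spacing between columns that contain at least one photodetector of that color (an assumption about how Figure 2 measures the pitch). The names `BAYER_TILE` and `layer_nyquist_x` are hypothetical.

```python
# Assumed 2x2 Bayer tile (RGGB order); it repeats across the sensor.
BAYER_TILE = [["R", "G"],
              ["G", "B"]]


def layer_nyquist_x(color, pitch=1.0, size=8):
    """Nyquist frequency in the x direction for one color layer.

    Assumption: the layer's x sampling pitch is the spacing between
    columns that hold at least one photodetector of that color.
    """
    columns = sorted({
        x
        for y in range(size)
        for x in range(size)
        if BAYER_TILE[y % 2][x % 2] == color
    })
    spacing_in_sensels = columns[1] - columns[0]
    return 0.5 / (spacing_in_sensels * pitch)


nyquist_by_layer = {color: layer_nyquist_x(color) for color in "RGB"}
```

With p = 1, the G layer comes out at 0.5 and the R and B layers at 0.25, matching the two bullets. G reaches every column only because its rows are offset from one another; within any single row, G also appears only every second photodetector.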
Now, the punch line.

In order to avert aliasing in the R and B layers, we must use an antialiasing filter whose response (MTF) has rolled off considerably by a spatial frequency of 0.25/p.

But given that the Nyquist frequency of our digital image is 0.5/p, we hope to be able to capture spatial frequencies in the image up to almost that. The antialiasing filter, having "rolled off" by about half that frequency, seriously interferes with our resolution hopes.
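To make the conflict concrete, here is a minimal Python sketch under assumed numbers: a detail at 0.4/p, which lies below the 0.5/p Nyquist frequency of the image but above the 0.25/p Nyquist frequency of the R and B layers (sampling pitch 2p). The helper `alias_frequency` is hypothetical; it applies the standard frequency-folding rule for uniform sampling.

```python
import math


def alias_frequency(frequency, sample_pitch):
    """Apparent frequency after sampling at the given pitch (folding rule)."""
    sampling_frequency = 1.0 / sample_pitch
    remainder = frequency % sampling_frequency
    return min(remainder, sampling_frequency - remainder)


p = 1.0             # photodetector pitch, arbitrary units
detail = 0.4 / p    # assumed test frequency, chosen for illustration

seen_by_g = alias_frequency(detail, p)        # G layer, x pitch p
seen_by_rb = alias_frequency(detail, 2 * p)   # R and B layers, x pitch 2p

# At the R/B sample positions x = 0, 2p, 4p, ... the true detail and its
# alias produce the same sample values, so they cannot be told apart.
positions = [2 * p * n for n in range(8)]
true_samples = [math.cos(2 * math.pi * detail * x) for x in positions]
alias_samples = [math.cos(2 * math.pi * seen_by_rb * x) for x in positions]
```

Here the G layer sees the detail at its true 0.4/p, while the R and B layers report it at about 0.1/p. That mismatch between layers is the sort of thing that shows up as color moiré unless the filter has already suppressed the detail.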

And that's what all the palaver is about.

Best regards,

Doug
