Doug Kerr

Well-known member

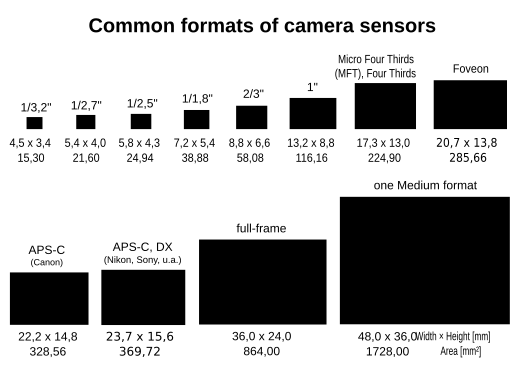

It is generally accepted that, to speak rather imprecisely, a camera with a larger sensor offers the potential of "better image quality" than one with a smaller sensor.

This should come as no surprise. Information theory teaches us that information and energy are intimately related. For a given scene (with a certain distribution of luminance), assuming an aperture of f/2.8 and a shutter speed of 1/200 sec, a 43 mm sensor ("full frame 35 mm"; sizes here are diagonals) receives about 31 times as much energy in an exposure as the 7.7 mm sensor in my new Panasonic Lumix DMC FZ200.
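The "about 31 times" figure can be checked with a little arithmetic. At the same f-number and shutter speed, the illuminance on the sensor is (to first order) the same, so the total energy collected scales with sensor area. The sketch below assumes both sensors have the same aspect ratio, so that area goes as the square of the diagonal. That is only an approximation here: the FZ200's sensor is 4:3 while full frame is 3:2.

```python
# Sketch: relative exposure energy for two sensors at the same f-number
# and shutter speed. Equal f-number and exposure time give (to first order)
# equal illuminance on the sensor, so total energy scales with sensor area.
# Assumption: both sensors share one aspect ratio, so area ~ diagonal**2.


def energy_ratio(diag_large_mm: float, diag_small_mm: float) -> float:
    """Area (and hence exposure energy) ratio of two same-aspect sensors."""
    return (diag_large_mm / diag_small_mm) ** 2


# 43 mm diagonal ("full frame 35 mm") vs 7.7 mm diagonal (FZ200)
print(f"{energy_ratio(43.0, 7.7):.1f}")  # prints "31.2"
```

The same function gives roughly 2.5 for the 43 mm vs 27 mm comparison discussed later.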

But, in a more specific way, just how does this happen?

Well, the greater real estate of the larger sensor can be deployed in two ways, between which the designers must trade off:

• More sensels, and thus greater potential for image resolution.

• Greater sensel area, and thus the potential for better signal-to-noise ratio at any sensor illuminance.
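To make the trade-off concrete, here is a minimal sketch that considers photon shot noise only (read noise, dark current, and quantum efficiency are all ignored). The photon density `PHOTONS_PER_UM2` and the two sensel pitches are hypothetical values chosen for illustration, applied to a 36 x 24 mm (full frame) sensor.

```python
import math

# Sketch of the sensel-count vs sensel-area trade-off, shot noise only.
# Hypothetical assumption: this many photons land on each square
# micrometre of sensor during the exposure.
PHOTONS_PER_UM2 = 1000.0


def sensel_count(width_mm: float, height_mm: float, pitch_um: float) -> int:
    """Number of square sensels of the given pitch that fit on the sensor."""
    cols = int(width_mm * 1000 / pitch_um)
    rows = int(height_mm * 1000 / pitch_um)
    return cols * rows


def sensel_snr(pitch_um: float) -> float:
    """Shot-noise-limited SNR of one sensel: signal N, noise sqrt(N)."""
    n = PHOTONS_PER_UM2 * pitch_um ** 2
    return n / math.sqrt(n)  # equals sqrt(n)


for pitch in (6.0, 3.0):  # hypothetical pitches in micrometres
    count = sensel_count(36.0, 24.0, pitch)
    print(f"pitch {pitch} um: {count / 1e6:.0f} MP, SNR per sensel {sensel_snr(pitch):.0f}")
```

Halving the pitch gives four times as many sensels but halves the per-sensel SNR. If four small sensels are later combined, their photon counts add up to that of one large sensel, so for shot noise alone the combined SNR is the same.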

Now, in processing the sensor output, we can apply algorithms that improve the "noise" behavior of the image, generally at the expense of deterioration of some other image quality property. Some of this happens in the camera, and some of it may happen in post-processing.
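As a toy illustration of that trade-off, the sketch below uses a simple moving-average ("box") filter as a stand-in for noise reduction. It is not any particular camera's or raw converter's algorithm; it just shows noise in a flat area going down while a sharp step edge gets wider. The signal, noise level, and filter radius are all hypothetical.

```python
import random
import statistics


def box_filter(signal, radius):
    """Moving average over a window of 2*radius+1 samples (shrinks at the ends)."""
    out = []
    n = len(signal)
    for i in range(n):
        window = signal[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def edge_width(signal, low=0.0, high=1.0):
    """Samples between the 10% and 90% points of a rising step."""
    t10 = low + 0.1 * (high - low)
    t90 = low + 0.9 * (high - low)
    i10 = next(i for i, v in enumerate(signal) if v >= t10)
    i90 = next(i for i, v in enumerate(signal) if v >= t90)
    return i90 - i10


random.seed(1)
clean = [0.0] * 100 + [1.0] * 100  # an ideal step edge at index 100
noisy = [c + random.gauss(0.0, 0.1) for c in clean]  # hypothetical noise level
filtered = box_filter(noisy, radius=3)

# Noise: standard deviation over a flat region, before and after filtering.
noise_before = statistics.stdev(noisy[20:80])
noise_after = statistics.stdev(filtered[20:80])

# Sharpness: 10-90% width of the (noise-free) edge, before and after filtering.
print(f"noise  {noise_before:.3f} -> {noise_after:.3f}")
print(f"edge   {edge_width(clean)} -> {edge_width(box_filter(clean, 3))} samples")
```

Since the box filter is linear, filtering the clean step separately shows exactly what the filter does to the edge, independent of the particular noise sample.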

Now, let's look at what actually happens. We might expect the image from a camera with a 43 mm sensor (perhaps a Canon EOS 6D) to be "better in overall quality" than an image from a camera with a 27 mm sensor (perhaps a Canon EOS 70D).

But in just what way? How does this difference manifest itself, for example, on a print at a size of 12" x 8"? What is the difference that the viewer observes? And is the impression of a "better image" in part because of properties that the viewer may not be able to recognize but which nevertheless affect the overall "niceness" of the image?
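One ingredient of the print comparison is simply how much each image must be magnified to reach 12" x 8". Both the 43 mm and 27 mm sensors are 3:2, as is the print, so comparing diagonals is fair. This sketch uses the diagonals quoted above; the helper name is hypothetical.

```python
import math

MM_PER_INCH = 25.4


def enlargement(sensor_diag_mm: float, print_w_in: float = 12.0,
                print_h_in: float = 8.0) -> float:
    """Linear magnification from sensor to print, measured along the diagonal.

    Assumes the print and the sensor have the same aspect ratio (3:2 here).
    """
    print_diag_mm = math.hypot(print_w_in, print_h_in) * MM_PER_INCH
    return print_diag_mm / sensor_diag_mm


for diag in (43.0, 27.0):
    print(f"{diag:.0f} mm sensor: enlarged about {enlargement(diag):.1f}x")
```

The 27 mm sensor's image must be enlarged about 1.6 times as much (43/27), so any blur or noise measured at the sensor is magnified correspondingly more on the print.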

Best regards,

Doug