Bart_van_der_Wolf

pro member

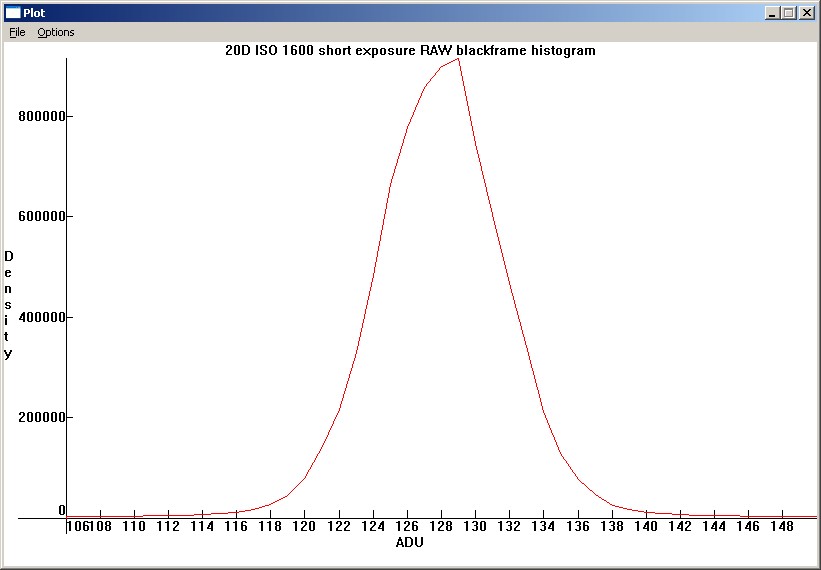

Oh, I forgot, the blackpoint is 1024 to 1025 ADU for the 1Dmk3.

Which only feeds my scepticism: it's a 3-bit difference (128 x 2^3 = 1024). It makes no sense for the camera's ADC to leave the LSBs of the Raw data unused. It looks suspiciously like a scaling issue, not the real data.
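To make the arithmetic concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the 128 level is simply the 1024 ADU blackpoint with its three least significant bits dropped; the names are hypothetical and not taken from any particular Raw converter.

```python
# Hypothetical illustration of a 3-bit scaling of the blackpoint.
BLACK_POINT_ADU = 1024  # blackpoint quoted above for the 1Dmk3
BIT_DIFFERENCE = 3      # the 3-bit difference discussed above

# Dropping the 3 LSBs is an integer division by 2^3 = 8.
scaled_black_point = BLACK_POINT_ADU >> BIT_DIFFERENCE
# Shifting back restores the magnitude, but not the discarded LSBs.
restored = scaled_black_point << BIT_DIFFERENCE


def distinct_levels_after_scaling(lo, hi, bits):
    """Count distinct outputs when the values lo..hi (inclusive) lose `bits` LSBs."""
    return len({value >> bits for value in range(lo, hi + 1)})


print(scaled_black_point, restored)
print(distinct_levels_after_scaling(1024, 1031, BIT_DIFFERENCE))
```

If the scaling assumption holds, eight neighbouring Raw levels collapse into one output level, which is why the 128 figure looks like rescaled data rather than what the ADC delivered.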

Now that I think of it, your complaint about the blackpoint came back to my mind. Did you not like the fact that the black level was not zero?

Correct, because it doesn't make sense (for Raw sensor data), and other software doesn't have a 128 blackpoint level in its output.

Clipping to zero is not optimum. Subtracting the black point is useful, and IRIS maintains the negative numbers, which are very useful. If you are going to do any kind of downsampling, binning, white balancing, or "demosaicing" (I don't think any of IRIS' methods are true demosaicing; they are just interpolations), it is best to do them with the negative numbers unclipped (you can actually do all of them except WB before subtracting the blackpoint).
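The point about keeping negative numbers can be sketched in a few lines of Python. This is only a simulation under assumed values (a blackpoint of 1024 ADU and Gaussian read noise with a standard deviation of 5 ADU, both hypothetical); it shows what happens to the average of a completely dark patch when the blackpoint-subtracted data are clipped at zero before averaging.

```python
import random


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


random.seed(0)       # fixed seed so the sketch is repeatable
BLACK_POINT = 1024   # assumed blackpoint in ADU
READ_NOISE = 5.0     # assumed noise standard deviation in ADU

# Simulated Raw values of a dark patch: blackpoint plus noise, rounded to integers.
raw = [round(random.gauss(BLACK_POINT, READ_NOISE)) for _ in range(10000)]

signed = [value - BLACK_POINT for value in raw]  # negative numbers kept
clipped = [max(0, value) for value in signed]    # negative numbers clipped to zero

mean_signed = mean(signed)    # stays close to zero
mean_clipped = mean(clipped)  # pushed above zero by the clipping

print(mean_signed, mean_clipped)
```

With the negative values kept, the noise averages out around zero; with clipping, the dark patch acquires a positive offset that any later binning, downsampling, or interpolation would carry along.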

The Raw quantized data from the ADC is not clipped, and there are no negative counts of electrons; that is all postprocessing. All the ADC does is convert the electron charge to discrete numbers on a scale from 0-4095 in 12-bit space, and it avoids the highest saturation values (max. ~3584 in 12-bit space on my 1DsMk2, depending on channel and ISO). Do note that on a camera like the 1D(s) Mark II it takes up to 20 electrons (depending on channel) to step between subsequent 12-bit Digital Numbers, due to the ADC gain settings at ISO 100. It is only at High ISO settings that we reach Unity Gain levels, with a 1 electron difference between Digital Numbers (which obviously marks the highest useful ISO/Analog Gain for Raw files).
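As a rough sketch of that quantization, the following Python snippet maps electron counts to Digital Numbers for two gain settings. It is a simplification under stated assumptions: the function name and parameters are hypothetical, and it ignores noise, the blackpoint offset, and the fact that the real ADC stops short of 4095.

```python
def electrons_to_dn(electrons, electrons_per_dn, max_dn=4095):
    """Quantize an electron count to a 12-bit Digital Number (idealized, no offset or noise)."""
    dn = int(electrons // electrons_per_dn)
    return min(dn, max_dn)  # the 12-bit scale cannot go above 4095


# ISO 100 case from the text: roughly 20 electrons per Digital Number.
low_iso = [electrons_to_dn(e, 20) for e in (0, 19, 20, 39, 40)]

# Unity Gain: 1 electron per Digital Number.
unity = [electrons_to_dn(e, 1) for e in (0, 1, 2, 3)]

print(low_iso)
print(unity)
```

At 20 electrons per Digital Number, counts of 0 and 19 electrons land on the same value, while at Unity Gain every additional electron produces a new Digital Number, so more analog gain beyond that point cannot resolve anything finer.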

Bart